Key Insights from the FSA's Third "AI Public-Private Forum"

Japan's Financial Services Agency (FSA) has published the minutes of its third AI Public-Private Forum, held on November 5, 2025, which brought together a group of experts to chart a course for the responsible use of Artificial Intelligence in the financial sector. The session had two primary goals: tackling the complex challenge of protecting personal information in the age of AI, and sharing practical, real-world strategies for managing the risks of AI in customer-facing services.

The discussion featured insights from leading voices in law, technology, and finance, including representatives from:

- Kataoka Sogo Law Office

- The PrivacyTech Association

- Daiwa Securities Group

- Mizuho Financial Group

- Aflac Life Insurance Japan

This post distills the key takeaways from their presentations and panel discussion, focusing on the core tension that defined the conversation: how to balance the immense innovative potential of AI with the non-negotiable duty to protect customer data and manage systemic risks.

1. The Core Challenge: Navigating Personal Data and Privacy Regulations

The central hurdle for financial institutions adopting AI is the legal and ethical handling of personal information. The forum dedicated significant time to exploring the nuances of data privacy laws and their application to AI development and deployment.

1.1 Legal Perspectives on Data Use

Takamatsu from Kataoka Sogo Law Office outlined the key legal considerations, which hinge on the nature and purpose of data use.

- "Shallow" vs. "Deep" Use: The legal analysis depends heavily on how deeply customer data is used.

  - Shallow Use refers to applying AI for simple efficiency gains, like automating existing clerical work. Legally, this is often seen as a straightforward extension of current operations.

  - Deep Use involves detailed analysis of customer information for more complex tasks such as marketing, credit scoring, and fraud detection. This level of analysis requires much deeper legal and ethical scrutiny.

- Purpose of Use: A key debate is whether "AI development and learning" must be explicitly listed as a permitted purpose for using customer data. Under the current interpretation, general-purpose model training that is not aimed at specific individuals may not need to be stated explicitly, by analogy with the treatment of data used to produce statistics.

- Outsourcing and Cross-Border Data: Financial firms that use external AI platforms retain significant responsibility, including for ensuring that their vendors manage data securely. If the AI provider is a foreign entity, firms must also comply with cross-border data transfer rules. Whether those rules apply depends on where the provider is located, not merely on where its servers are.

1.2 Regulatory Hurdles in Practice

Itaya of Daiwa Securities Group highlighted three practical hurdles that financial firms face when analyzing data, particularly customer conversation logs:

- Sensitive Information: AI might analyze conversation logs that contain legally defined "sensitive information" (e.g., related to health or personal beliefs), which is subject to strict usage restrictions under personal information protection guidelines.

- Corporate Information: Conversations may include non-public corporate information. This makes it difficult to control who may access and analyze the data without breaching confidentiality.

- Information Sharing Regulations: Strict "firewall" rules prevent certain types of information from being shared between different companies within the same financial group. Providing conversation data to a group IT systems company so that it can develop an AI model could violate these information-sharing barriers.
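As a rough illustration of how the first and third hurdles might be handled before any analysis begins, the Python sketch below filters conversation logs. It is a minimal sketch under stated assumptions, not any presenter's system: the keyword patterns, the `Record` type with its `entity` field, and the rule of dropping every record collected by another group company are all hypothetical. A real screen would follow the applicable guidelines and legal advice rather than a short regex list.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical keyword patterns standing in for legally defined "sensitive
# information" categories; a production screen would need far broader coverage.
SENSITIVE_PATTERNS = {
    "health": re.compile(r"\b(diagnos\w*|hospital\w*|illness\w*|medication\w*)\b", re.IGNORECASE),
    "beliefs": re.compile(r"\b(religio\w*|church|temple|political party)\b", re.IGNORECASE),
}


@dataclass
class Record:
    entity: str  # hypothetical field: group company that collected the conversation
    text: str


def sensitive_categories(text: str) -> set[str]:
    """Return the names of the sensitive categories whose patterns appear in `text`."""
    return {name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)}


def screen_for_analysis(records: list[Record], analyzing_entity: str) -> list[Record]:
    """Keep only records collected by the analyzing entity itself (so nothing
    crosses an intra-group firewall) that contain no flagged sensitive content
    (those would go to a stricter, separate process instead)."""
    kept = []
    for record in records:
        if record.entity != analyzing_entity:
            continue  # using this record would mean sharing across the firewall
        if sensitive_categories(record.text):
            continue  # exclude from routine analysis
        kept.append(record)
    return kept


if __name__ == "__main__":
    logs = [
        Record("securities", "I want to rebalance my portfolio"),
        Record("securities", "I was in hospital last month, so I missed the call"),
        Record("bank", "Please raise my transfer limit"),
    ]
    for r in screen_for_analysis(logs, "securities"):
        print(r.text)  # only the portfolio request survives
```

Dropping records outright is the most conservative choice; an alternative design would redact the flagged spans and keep the rest, at the cost of trusting the detector more.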

Faced with these complex legal and data privacy hurdles, the discussion turned to technological solutions designed to mitigate these very risks.

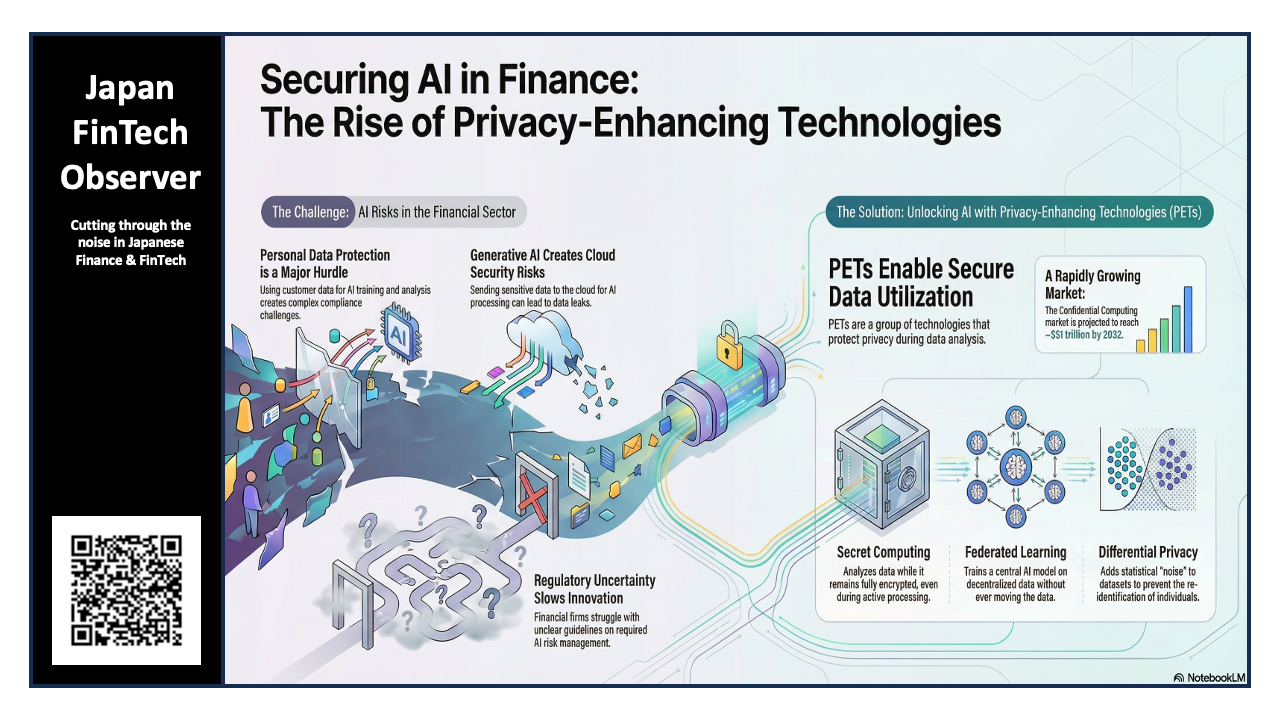

2. A Potential Solution: Using Technology to Protect Privacy

Takenouchi of the PrivacyTech Association introduced a suite of technologies designed to enable data analysis while safeguarding privacy. These are collectively known as Privacy Enhancing Technologies (PETs).

In simple terms, PETs are a set of tools that allow companies to use and analyze data to gain insights without exposing the sensitive, raw information itself.

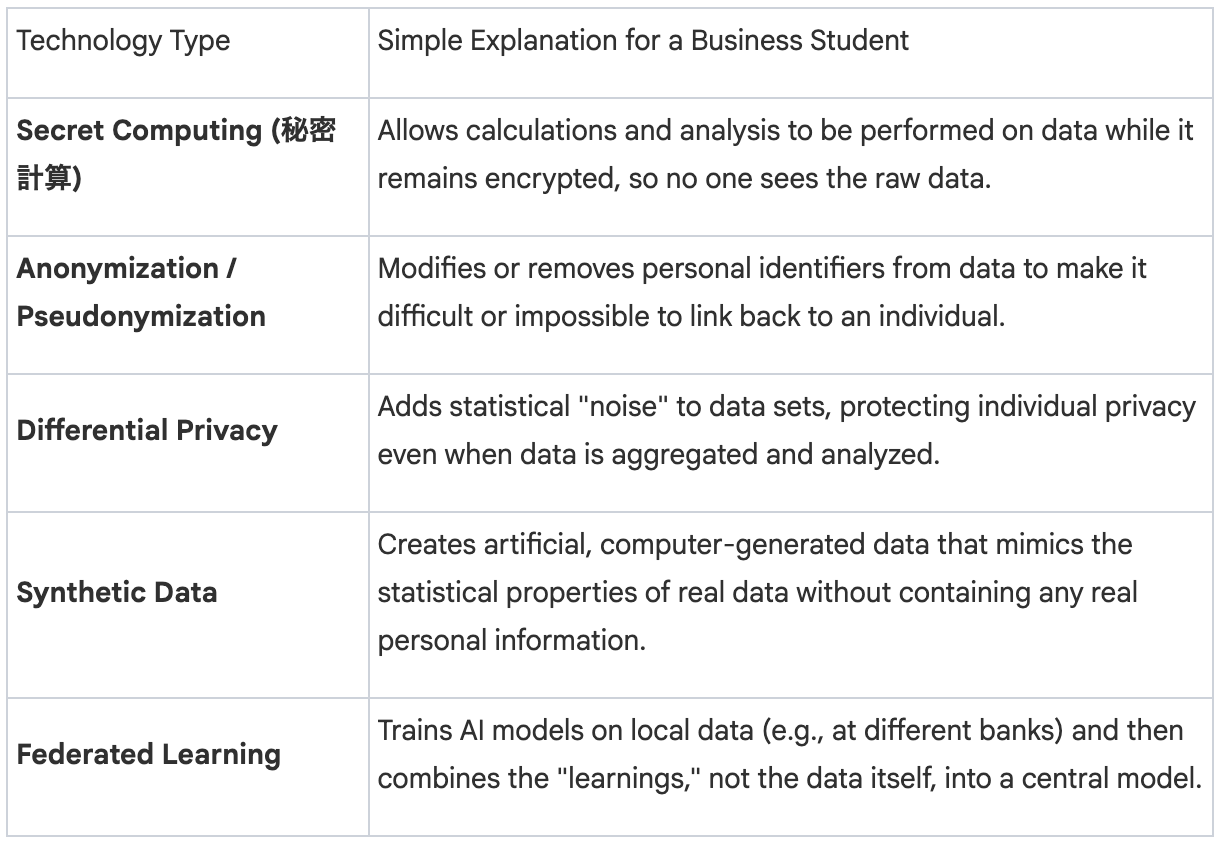

The presentation highlighted five key types of PETs relevant to the financial industry, including Differential Privacy, Federated Learning, and Secret Computing (secure computation).

However, these technologies come with a critical business trade-off between the level of privacy and the accuracy of the AI model. Techniques that add noise to or otherwise obscure data, such as Differential Privacy, reduce the information available to the model, which can degrade its performance. For example, research indicates that a modern large language model trained with differential privacy can fall to an accuracy comparable to that of a non-private model from about five years earlier. This is a crucial strategic consideration when choosing which PET to deploy.
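The privacy-accuracy trade-off can be seen in miniature with the Laplace mechanism, one standard way of achieving differential privacy. The Python sketch below releases a noisy mean of hypothetical account balances. It is illustrative only: it applies differential privacy to a single statistic rather than to model training (where methods such as DP-SGD add noise to gradients instead), and the function names, bounds, and data are assumptions.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_mean(values: list[float], lower: float, upper: float,
                 epsilon: float, rng: random.Random) -> float:
    """epsilon-differentially-private mean via the Laplace mechanism.

    Values are clipped to [lower, upper], so changing one record moves the mean
    by at most (upper - lower) / n. That sensitivity divided by epsilon sets the
    noise scale: smaller epsilon means stronger privacy and more noise.
    """
    n = len(values)
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical data: 1,000 account balances between 0 and 10,000
    balances = [rng.uniform(0, 10_000) for _ in range(1_000)]
    true_mean = statistics.fmean(balances)
    for eps in (10.0, 1.0, 0.1, 0.01):
        errors = [abs(private_mean(balances, 0, 10_000, eps, rng) - true_mean) for _ in range(200)]
        print(f"epsilon={eps:<5} mean absolute error ~ {statistics.fmean(errors):.1f}")
```

Because the expected absolute error of Laplace noise equals its scale, `(upper - lower) / (n * epsilon)`, each tenfold reduction in epsilon (stronger privacy) should produce roughly a tenfold increase in error, the same tension described above for model accuracy.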

Key Use Cases in Finance

The forum identified three primary applications for PETs in the financial sector:

- Technical Governance: Using PETs to build robust, technologically enforced data protection systems that help meet the requirements of the Personal Information Protection Act.

- Data Collaboration: Enabling multiple institutions to securely pool insights for common goals, such as building a more effective industry-wide fraud detection model, without sharing their confidential customer data with each other.

- AI Risk Reduction: Minimizing the risk of data leaks, or of sensitive inputs being unintentionally used to train the provider's models, when using powerful external cloud-based AI services to process sensitive financial information.
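To make the Data Collaboration case concrete, here is a minimal Python sketch of additive secret sharing, one of the basic building blocks of secure computation. Three hypothetical banks learn their combined count of suspected fraud cases without any of them revealing its own count. It assumes honest-but-curious parties that do not collude and runs everything in one process for clarity; a real deployment would place each party on separate infrastructure with authenticated channels. The modulus and function names are illustrative choices.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus; all share arithmetic is done mod this value


def share(value: int, n_parties: int) -> list[int]:
    """Split a non-negative `value` (< MODULUS) into n additive shares that sum
    to it mod MODULUS. Any n-1 of the shares are jointly uniform and reveal nothing."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: list[int]) -> int:
    """Each institution splits its value into shares and sends share j to party j.
    Party j publishes only the sum of the shares it received; adding the
    published partial sums reveals the total and nothing about individual inputs."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]  # row i: shares from institution i
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS


if __name__ == "__main__":
    # Hypothetical per-bank counts of suspected fraud cases (never shared in the clear)
    counts = {"Bank A": 120, "Bank B": 75, "Bank C": 42}
    print(secure_sum(list(counts.values())))  # 237
```

The same pattern extends from sums to the weighted sums needed for model training, which is where production secure-computation frameworks take over from a toy like this.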

These use cases directly address the challenges raised earlier: Federated Learning or Secret Computing, for example, could let group companies develop models together without violating information-sharing firewalls. Yet while these technologies offer powerful tools for data protection, the forum also emphasized that technology alone is not enough. Presentations from major financial institutions showed how they are building robust governance frameworks to manage AI in practice.

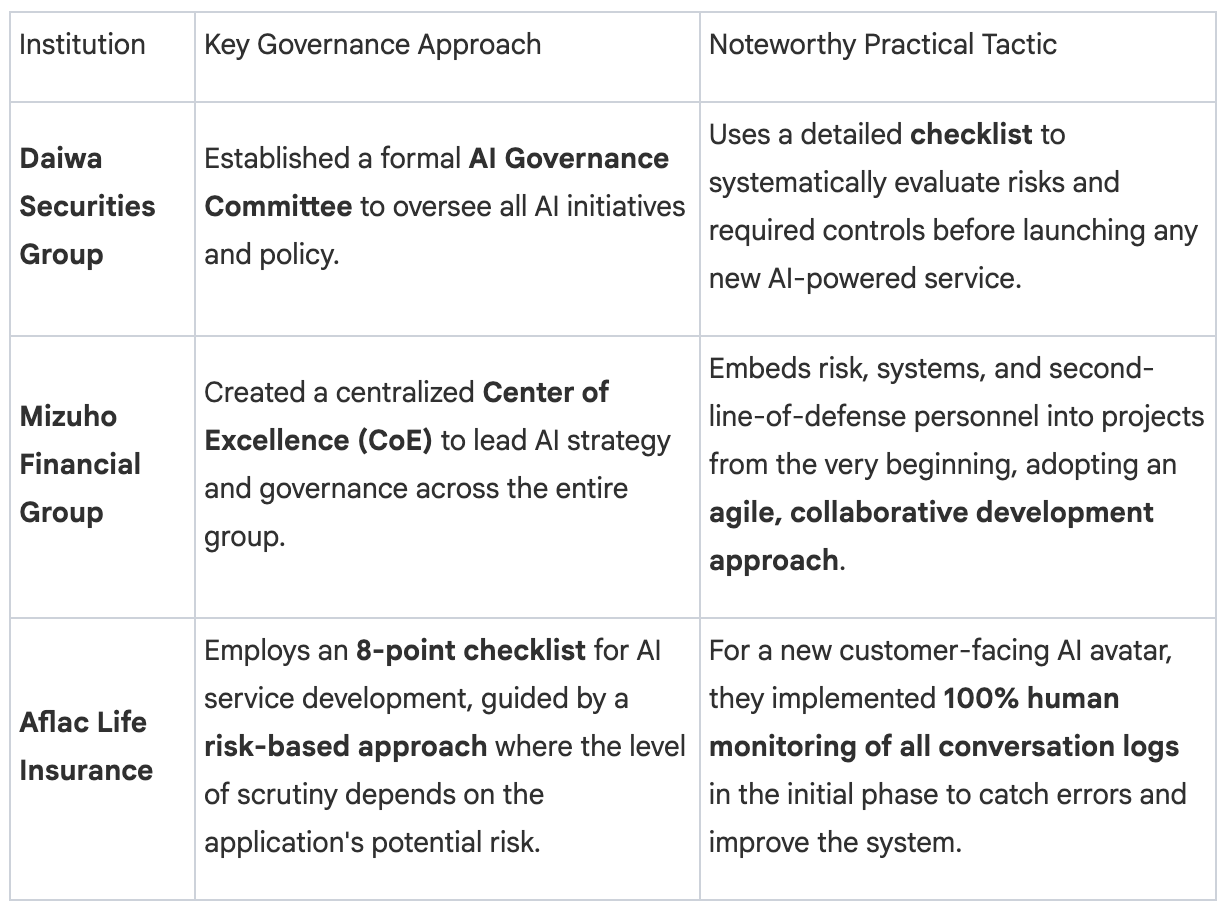

3. In Practice: How Financial Giants Are Managing AI Risk

A recurring theme was the need for a balanced strategy of "Offense and Defense" (攻めと守り). This framework involves:

- Offense: Proactively pursuing AI to create innovative products, improve customer experiences, and drive business growth.

- Defense: Simultaneously establishing strong governance, clear rules, and robust risk management systems to ensure AI is used safely and responsibly.

Comparing Governance Strategies

The three presenting financial institutions shared their unique, yet philosophically aligned, approaches to AI governance.

Shared Principles for Customer-Facing AI

To counter the inherent weaknesses of generative AI, such as unpredictability and a lack of transparency, the panelists converged on a practical three-part playbook for customer-facing applications.

- Build Guardrails: All firms emphasized the necessity of technical controls to prevent harmful or inaccurate AI outputs. This includes using techniques like Retrieval-Augmented Generation (RAG) to force the AI to base its answers on a pre-approved set of reliable documents, implementing content filters, and creating blacklists of forbidden words or topics (e.g., promising guaranteed profits or comparing competitors' products).

- Human in the Loop: All presenters agreed that a human safety net is non-negotiable. This means designing clear escalation paths so a customer can easily reach a human agent if needed. It also involves robust monitoring, especially in the early stages of deployment, to review AI interactions, correct mistakes, and continuously improve the system's performance and safety.

- Customer Transparency: Firms agreed on the importance of being upfront with customers. Best practices include clearly informing users they are interacting with an AI, obtaining their consent before the interaction begins, and, where possible, showing the source of the information the AI used to generate its answer to build trust and allow for verification.
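The three-part playbook can be sketched as a single reply pipeline. This is a minimal, hypothetical Python example, not any presenter's system: keyword overlap stands in for a real RAG retriever, `draft_from_model` is a placeholder for whatever generative model is used, and the document store, blacklist patterns, and disclosure text are invented for illustration. It grounds each answer in an approved document, blocks blacklisted phrasing, escalates to a human when a check fails or the customer asks, and labels answers as AI-generated with their source.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical pre-approved knowledge base: document id -> approved text.
APPROVED_DOCS = {
    "fees-2025": "Account maintenance is free. Domestic transfers cost 110 yen.",
    "support-hours": "Customer support is available on weekdays from 9:00 to 17:00.",
}
# Hypothetical blacklist: phrasing an answer must never contain.
BLACKLIST = [r"guaranteed (profit|return)s?", r"better than \w+ bank"]
AI_DISCLOSURE = "[You are chatting with an AI assistant. Type 'agent' to reach a person.]"
HANDOFF = "Let me connect you with a member of our staff."


@dataclass
class Reply:
    text: str
    source: str | None  # id of the approved document the answer is grounded in
    escalate: bool  # True when a human agent should take over


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> tuple[str, str] | None:
    """Return the approved (id, text) pair sharing the most words with the question,
    if it shares at least `min_overlap`. A stand-in for a real RAG retriever."""
    q = tokens(question)
    doc_id, text = max(APPROVED_DOCS.items(), key=lambda item: len(q & tokens(item[1])))
    return (doc_id, text) if len(q & tokens(text)) >= min_overlap else None


def answer(question: str, draft_from_model: Callable[[str, str], str]) -> Reply:
    """Ground, filter, and label one reply; hand off to a human whenever a check fails."""
    if "agent" in tokens(question):  # explicit escalation path for the customer
        return Reply(HANDOFF, None, True)
    hit = retrieve(question)
    if hit is None:  # nothing approved to ground on: hand off rather than guess
        return Reply(HANDOFF, None, True)
    doc_id, context = hit
    draft = draft_from_model(question, context)  # model is given only approved context
    if any(re.search(p, draft, re.IGNORECASE) for p in BLACKLIST):
        return Reply(HANDOFF, None, True)  # blocked output never reaches the customer
    return Reply(f"{AI_DISCLOSURE}\n{draft}\n(Source: {doc_id})", doc_id, False)


if __name__ == "__main__":
    echo_model = lambda question, context: context  # placeholder for a real LLM call
    print(answer("How much do domestic transfers cost?", echo_model).text)
```

Consent capture and the review logs needed for early-stage monitoring are left out for brevity; in practice, every escalated or blocked reply would also be recorded for human review.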

These practical strategies from industry leaders set the stage for a broader discussion on what the financial sector needs to collectively do to move forward.

4. Conclusion: The Road Ahead for AI in Finance

The final panel discussion synthesized the day's insights into a clear set of needs for the industry to advance its AI capabilities responsibly.

The participants broadly agreed on three key areas requiring collective action:

- Knowledge Sharing & Guidelines: There is a strong desire for collaborative efforts to share best practices on risk management and establish industry-wide guidelines, similar to what was successfully done two decades ago to ensure safety in the rollout of internet banking.

- Regulatory Clarity & Sandboxes: The industry needs clearer regulatory interpretations of how existing laws apply to AI (e.g., at what point does an AI's recommendation become a regulated "solicitation"?). There was also a call for "sandbox" environments: safe, controlled settings where firms can test new AI services without facing full regulatory consequences, allowing innovation to proceed under oversight.

- Evolving Governance: Participants recognized that AI governance cannot be a "set it and forget it" policy. It must be a dynamic process that evolves with the technology. This requires continuous monitoring and, critically, a focus on fostering risk awareness and sensitivity among frontline employees, who are the "first line of defense" in identifying potential AI-related issues.

For business leaders and analysts, the key takeaway is that the financial industry's adoption of AI is far more than a technological race. It is a complex and strategic effort to carefully balance the pursuit of innovation with the foundational pillars of risk management, regulatory compliance, and, most importantly, public trust.